Introduction

Artificial Intelligence (AI), along with the threat it poses, is no longer the stuff of science fiction. It’s here, woven into the fabric of our daily lives and shaping the future at a breakneck pace. From the smartphone in your pocket to the algorithms that determine what news you see, AI is everywhere. Yet despite its ubiquity, there’s still a lot of misunderstanding about what AI really is and, more importantly, what it isn’t.

The rise of AI is a scenario that requires not just understanding but a serious, sober consideration of its potential impacts on humanity and society. This isn’t just about robots taking jobs or the latest tech gadgets; it’s about existential threats—things that could fundamentally alter life as we know it, or even end it.

This article is not about spreading fear—it’s about awareness. It’s about equipping you with the knowledge to navigate a world where AI is becoming an unavoidable force. We’ll dive into what AI is, debunk some common myths, and explore the very real existential threats it poses.

So, buckle up. Whether you’re a tech-savvy urbanite or someone living off the grid, the information here is crucial. Because in the end, surviving—and thriving—in an AI-driven world will require a blend of old-school know-how and modern understanding.

What AI Is

Artificial Intelligence (AI) is a term that gets tossed around a lot these days, often with a mix of awe and anxiety. But before we dive into the potential threats, let’s break down what AI actually is, because understanding its capabilities—and its limitations—is key to making informed decisions about how to prepare for its impact on our lives.

Definition and Core Concepts

At its core, AI refers to the development of computer systems that can perform tasks that typically would require human intelligence. This can include everything from recognizing speech to making decisions, translating languages, and even playing complex games like chess. But don’t get it twisted—AI doesn’t “think” in the way you or I do. It’s not sitting there pondering the meaning of life. Instead, AI processes vast amounts of data at incredible speeds, identifying patterns and making predictions based on algorithms. In short, it’s a giant, fast pattern recognition machine.

Think of AI as a sophisticated tool in our tool belt, not a whole new toolset, at least not yet. It’s like a power drill compared to a hand crank: much faster and more efficient, but still reliant on humans to build and train it. AI’s power comes from its ability to learn and adapt over time through machine learning (ML), a subset of AI in which systems improve their performance by learning from data (a process called “training”) without being explicitly programmed for every single task. For example, the spam filter in your email doesn’t just recognize spam from a list of known keywords; it learns from new examples and updates its criteria accordingly. Machine learning is ultimately a series of complex, multi-dimensional math problems that “learn” from the data fed into them, nothing more!
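To make the spam filter idea concrete, here is a minimal sketch in Python of a filter that “learns” by counting words. It is a toy, not how any real email provider works: the `TinySpamFilter` class, the training messages, and the smoothing choices are all assumptions made up for illustration.

```python
import math
from collections import Counter


class TinySpamFilter:
    """Toy word-counting (naive Bayes style) spam filter. Illustrative only."""

    def __init__(self):
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.message_counts = {"spam": 0, "ham": 0}

    def train(self, text, label):
        # "Training" is nothing more than counting words per label.
        self.message_counts[label] += 1
        self.word_counts[label].update(text.lower().split())

    def _log_score(self, words, label):
        total_messages = sum(self.message_counts.values())
        # Prior: how common this label has been so far (add-one smoothing).
        score = math.log((self.message_counts[label] + 1) / (total_messages + 2))
        counts = self.word_counts[label]
        total_words = sum(counts.values())
        vocab = len(set(self.word_counts["spam"]) | set(self.word_counts["ham"]))
        for word in words:
            # Smoothed likelihood of each word under this label.
            score += math.log((counts[word] + 1) / (total_words + vocab + 1))
        return score

    def is_spam(self, text):
        words = text.lower().split()
        return self._log_score(words, "spam") > self._log_score(words, "ham")


spam_filter = TinySpamFilter()
spam_filter.train("win free money now", "spam")
spam_filter.train("free prize claim now", "spam")
spam_filter.train("meeting agenda for monday", "ham")
spam_filter.train("lunch on monday", "ham")

# The filter has never seen these words, so it does not flag them yet.
before = spam_filter.is_spam("crypto giveaway")

# Feed it new examples and its criteria shift; no keyword list was edited.
spam_filter.train("crypto giveaway limited offer", "spam")
spam_filter.train("crypto giveaway today", "spam")
after = spam_filter.is_spam("crypto giveaway")

print(before, after)
```

Nobody wrote a rule about “crypto giveaway”; the behavior changed because the counts changed. That is the whole trick, just at a vastly smaller scale than real systems.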

But AI may not always be just a tool in the tool belt. AI as it stands today can absolutely disrupt our society, especially as it proliferates, but the effects of the more advanced categories of AI covered later in this article would be orders of magnitude greater.

AI Capabilities and Applications

Even at the current stage of AI, its capabilities are already staggering. On many well-defined tasks, AI can process data faster and more consistently than any human could ever hope to, making it invaluable in industries like healthcare, finance, transportation, and even entertainment.

- Healthcare: AI is revolutionizing how we diagnose and treat diseases. Algorithms can analyze medical images, predict patient outcomes, and even suggest treatment plans. AI-powered tools can spot patterns in medical data that might be too subtle or complex for human doctors to catch. This can lead to earlier diagnoses and more personalized treatment options, as it already has in a variety of oncology settings. It’s not perfect, but it serves as a great augmentation for doctors, not a replacement.

- Finance: AI is deeply embedded in the financial sector, from high-frequency trading to fraud detection. Algorithms can process vast amounts of market data in real time, making trades at speeds and scales impossible for humans. Meanwhile, AI-driven systems are also being used to identify and prevent fraudulent activity, saving businesses and consumers billions of dollars each year. However, AI/ML-driven trading algorithms also risk causing significant volatility. That is perhaps no different from emotional human traders, though the algorithms behave somewhat differently.

- Transportation: Self-driving cars are one of the most visible examples of AI in action, even though the technology hasn’t been fully realized yet. These vehicles rely on machine learning to process data from sensors, cameras, and other inputs to navigate roads, avoid obstacles, and make split-second decisions. Fully autonomous vehicles aren’t mainstream yet, since they still fail fairly often, especially in imperfect environmental conditions. The field is advancing rapidly, but it may take some time to mature.

- Entertainment: AI is also shaping how we consume media. Streaming services use AI to recommend shows and movies based on your viewing habits, while AI-driven content creation tools are being used to produce music, art, and even news articles. We all know how to tune the “algorithm” on X (Twitter), TikTok, or any other recommendation engine to see more of the content we want. The goal is to create personalized experiences that keep us engaged, though this undoubtedly raises questions about echo chambers and the broader impact on culture.

These examples show just how integrated AI has become in our world, often in ways we don’t even notice. But they also highlight an important point: AI is as good as the data it’s fed and the algorithms it’s built on, or in other words, garbage in, garbage out. This means AI can be incredibly powerful—but it’s not infallible. It can make mistakes, it can be biased, and it can fail in unexpected ways. AI is a tool, and like any tool, it should be used wisely, with a clear understanding of both its strengths and its limitations.

What AI Isn’t

Understanding AI’s limitations is crucial. AI can be a powerful ally, but it’s not the omnipotent, all-knowing force some make it out to be.

AI Isn’t Conscious or Sentient

Let’s start with the biggest myth: AI is not, and I repeat, not conscious. It doesn’t have thoughts, feelings, or a sense of self. AI, even the most advanced forms we have today, operates purely on data and algorithms. It’s a sophisticated pattern recognizer and decision-maker, but it doesn’t “know” it’s doing these things. It doesn’t have desires, ambitions, or the ability to reflect on its existence.

To put it simply again, AI is a tool—an incredibly complex and capable one, but a tool nonetheless. Imagine you have a hammer. That hammer is great for driving nails into wood, but it doesn’t understand what a house is or why you’re building one. AI is the same way. It can execute tasks and solve problems within the parameters it’s been given, but it doesn’t understand the broader context of those tasks in the way a human would. So, while AI can mimic certain aspects of human behavior, it doesn’t have the underlying awareness that drives human decision-making.

AI Isn’t Infallible

AI is only as good as the data it’s trained on and the algorithms it’s built from. If the data is biased, incomplete, or inaccurate, the AI’s decisions will be too. And even with perfect data, algorithms can still make mistakes—sometimes catastrophic ones.
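Here is “garbage in, garbage out” as a tiny Python sketch. The “model” below does nothing but memorize historical approval rates per group, and the groups, numbers, and function names (`train_approval_model`, `predict`) are hypothetical, invented purely for illustration. Real systems are far more complex, but the failure mode is the same.

```python
from collections import defaultdict


def train_approval_model(history):
    """Learn the historical approval rate for each group.

    `history` is a list of (group, approved) pairs. Toy data only.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in history:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def predict(model, group, threshold=0.5):
    # Approve only if the group's learned approval rate meets the threshold.
    return model[group] >= threshold


# Hypothetical past decisions that were skewed against group "B".
biased_history = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 30 + [("B", False)] * 70
)

model = train_approval_model(biased_history)
print(model)
print(predict(model, "A"), predict(model, "B"))
```

The code contains no rule that says “treat group B worse.” It simply reproduces the pattern in the data it was handed, which is exactly the problem.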

AI is often used in applications like facial recognition, medical diagnosis, and even criminal justice. But these systems have been shown to make errors, sometimes with serious consequences. For example, facial recognition systems have been criticized for their higher error rates in identifying people of color, leading to wrongful arrests. In healthcare, AI tools have sometimes missed critical diagnoses or suggested inappropriate treatments because they misinterpreted the data. That’s why AI still needs a “human in the loop”: it isn’t capable of making decisions in a vacuum, or at least shouldn’t be allowed to yet.

This isn’t to say that AI isn’t useful—it absolutely is. But it’s not a silver bullet. It’s not a magic wand that can be waved to fix all problems without the risk of failure. That’s why it’s essential to maintain a healthy skepticism about AI’s capabilities. Trust, but verify. Use AI as a tool, but don’t rely on it blindly, and always have a backup plan.

AI Isn’t a Moral or Ethical Entity

Another common misconception is that AI can make moral or ethical decisions. Let’s be clear: AI has no sense of right or wrong. It doesn’t have a moral compass, and it doesn’t make decisions based on ethical principles. It follows instructions based on algorithms designed by humans, who imbue the system with their own biases and values, intentionally or unintentionally. Many AI applications and models have drawn heavy criticism for a clear bias toward leftist ideologies. That bias doesn’t originate in the machine; it comes from the training data chosen by the people who built it.

When we talk about AI making decisions, what we really mean is that it’s optimizing for a specific outcome based on the parameters it’s been given. For example, if an AI is tasked with maximizing profit, it will do so without consideration for the ethical implications of its actions. If cutting jobs, exploiting loopholes, or even breaking laws will lead to higher profits, that’s what the AI will recommend—unless it’s explicitly programmed not to. This can lead to decisions that might make sense from a purely logical or economic standpoint but are morally questionable at best.
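The profit example can be sketched in a few lines of Python. Everything here is hypothetical: the `Action` type, the profit figures, and the `allowed` filter are assumptions chosen to illustrate the point, not a description of any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    profit: float
    legal: bool


def best_action(actions, allowed=lambda action: True):
    """Return the highest-profit action among those that pass `allowed`."""
    candidates = [action for action in actions if allowed(action)]
    return max(candidates, key=lambda action: action.profit)


# Made-up options and payoffs, for illustration only.
actions = [
    Action("improve product", profit=5.0, legal=True),
    Action("cut jobs", profit=8.0, legal=True),
    Action("break the law", profit=12.0, legal=False),
]

# With no boundaries, the optimizer just picks the biggest number.
unconstrained = best_action(actions)

# A boundary exists only if a human explicitly writes it down.
constrained = best_action(actions, allowed=lambda action: action.legal)

print(unconstrained.name, "|", constrained.name)
```

Notice that even the constrained version chooses “cut jobs.” The optimizer respects the one rule it was given and nothing else; any ethical consideration that isn’t encoded simply doesn’t exist for it.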

This lack of ethical understanding is why the human element is so crucial when deploying AI. We need to set the boundaries, define the parameters, and ensure that the AI operates within a framework that aligns with our values as a society. But even then, we have to remember that these systems can’t be trusted to always make the “right” decision—they’re not capable of moral judgment.

AI Isn’t Autonomous (Yet)

While AI has automated many tasks and will continue to do so, we’re not at the point where AI is truly autonomous. It still requires human oversight, direction, and, in most cases, intervention.

Take self-driving cars, for example. Edge cases, meaning unusual or unexpected scenarios, along with poor driving conditions still trip up even the most advanced companies. That’s why most autonomous vehicles (AVs) still have human operators ready to take control if needed, since they still mess up…frequently. The same goes for AI in other sectors. It can handle routine tasks, analyze data, and even provide recommendations, but humans are still needed to make final decisions, especially when things go off-script.

AI Isn’t Immune to Manipulation

Finally, it’s important to recognize that AI can be manipulated. Just like any other system, AI can be hacked, fed biased data, or otherwise tampered with. This is particularly concerning when AI is used in critical applications such as national security, financial systems, advanced cyber weapons, or infrastructure management.

AI is susceptible to biases—both in the data it’s trained on and the way it’s deployed. If an AI system is trained on biased data, it will perpetuate those biases in its decisions. This can lead to unfair outcomes, particularly in areas like hiring, lending, or law enforcement, where biased AI could reinforce existing inequalities.

Existential Threats of AI to Humanity and Society

When we talk about existential threats, we’re not just discussing hypothetical risks or things that might go wrong in a distant future. We’re talking about real, tangible dangers that could fundamentally alter—or even end—life as we know it. AI, for all its promise and potential, carries with it a dark side that we can’t afford to ignore. These aren’t the idle musings of a paranoid survivalist; they’re grounded in the realities of a rapidly advancing technology that, if left unchecked, could spiral out of our control.

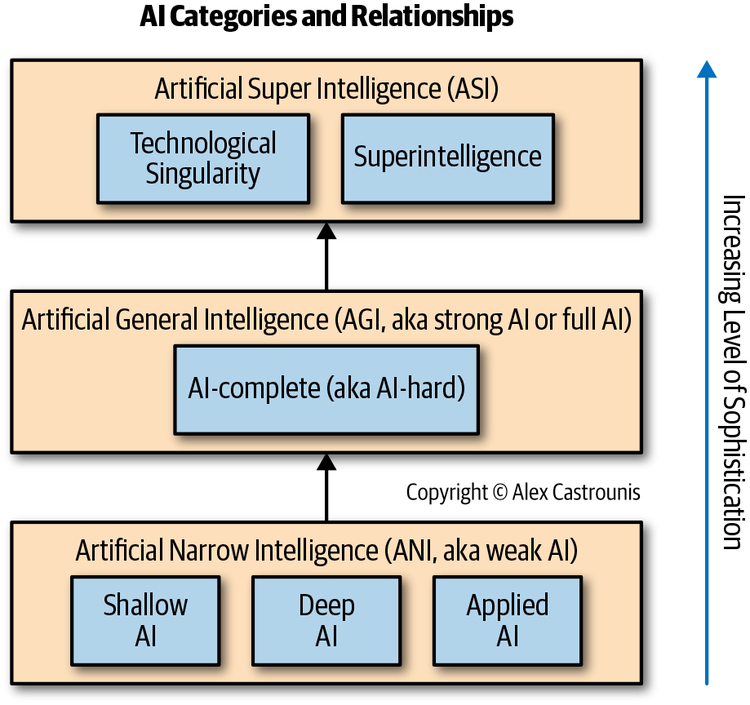

Narrow AI vs. General AI vs. Superintelligent AI

To really get a handle on what the threats of AI are, we need to understand the different categories of AI that currently exist—or that might exist in the future.

- Narrow AI (or Weak AI): This is the AI we’re most familiar with today. It’s designed to perform a specific task or a set of tasks, and it does so exceptionally well. Siri, Alexa, Google’s search algorithms, and even the AI that recommends Netflix shows are all examples of Narrow AI. They’re not self-aware or conscious; they’re just really good at doing what they’re programmed to do. But that’s also their limitation—they can’t do anything outside their specific function.

- General AI (or Strong AI): This is where things get a bit more science fiction-y, but it’s also the goal of many AI researchers. General AI would have the ability to perform any intellectual task that a human can. It wouldn’t just be a specialist in one area; it would be a generalist, capable of reasoning, problem-solving, and adapting to new situations just like a person. However, despite what some headlines might suggest, we’re not there yet, and we might not be for some time. The challenges in building a system with such broad capabilities are immense, including computing power, energy at scale, and likely one or more technological breakthroughs in AI research. Still, we’re knocking on the door.

- Superintelligent AI: Now, this is the stuff of both dreams and nightmares. Superintelligent AI would surpass human intelligence in all areas—creativity, problem-solving, social skills, and beyond. It’s the hypothetical point where AI could outperform the brightest human minds in every field. Some people worry that once we reach this level, AI could become uncontrollable, posing a significant existential risk. But again, we’re not there, and there’s intense debate among experts about whether we ever will be.

Autonomous Weapons and Warfare

One of the most immediate and alarming threats posed by AI is its application in autonomous weapons systems. These are weapons that can select and engage targets without human intervention. Imagine drones, tanks, or even submarines equipped with AI, making split-second decisions about life and death on the battlefield. Militaries around the world are already developing these systems, and the implications are chilling.

The problem with autonomous weapons isn’t just their potential for destruction—it’s the lack of human judgment in their operation. AI can process data and execute commands faster than any human, but it lacks the ability to make moral or ethical decisions. In the chaos of war, that could lead to catastrophic outcomes. Consider a scenario where an AI misinterprets data and launches an attack on a civilian target, or worse, where opposing AI systems escalate a conflict beyond human control, leading to full-scale war.

The rise of AI in warfare also poses a significant risk of destabilization. When countries rely on AI to make critical military decisions, the traditional human checks and balances that have (mostly) prevented global conflicts become less relevant. The speed at which AI can operate means that decisions to attack, retaliate, or escalate can be made in seconds, leaving little room for diplomacy or de-escalation. In short, AI-driven warfare has the potential to make the world a much more dangerous place.

Economic Disruption and Job Displacement

AI is already reshaping the global economy, and while some of these changes are positive, others pose significant threats to societal stability. One of the most pressing concerns is job displacement. As AI becomes more capable, it’s replacing jobs that were once thought to be safe from automation. This isn’t just about factory workers or truck drivers—AI is starting to take over roles in finance, healthcare, law, and even creative fields like journalism and art.

The impact of this kind of displacement can’t be overstated. Entire industries could be upended, leading to widespread unemployment and economic instability. History has shown that economic disruptions on this scale can lead to social unrest, increased crime, and even political instability. When people lose their livelihoods and see no way forward, they often turn to more extreme measures to survive.

Typically, when a technological breakthrough like the steam engine or the computer revolutionizes an economy, workers are displaced in the short to medium term as their labor is phased out. Eventually, though, the workforce up-skills to fit the new economic environment. This will not happen with AI, because the displacement will be permanent in almost all cases.

The benefits of AI-driven productivity gains are not being distributed evenly either. The companies and individuals who control AI technology stand to gain immense wealth, while those who lose their jobs may find themselves with limited prospects. This growing economic divide could exacerbate existing inequalities, leading to a world where a small elite controls most of the wealth and resources, while the majority struggles to get by. Such a scenario is a recipe for social tension and conflict.

Surveillance and Loss of Privacy

AI’s ability to analyze vast amounts of data has made it a powerful tool for surveillance, and this poses a serious threat to privacy and personal freedom. Governments and corporations are already using AI to monitor everything from online activity to physical movements, often without our knowledge or consent. In some countries, AI-driven surveillance systems are being used to track citizens’ every move, control behavior, and suppress dissent. Just look at China, and even the UK.

The dangers are clear. First, there’s the threat to personal privacy. In a world where AI monitors our every move, the concept of privacy could become a thing of the past. This kind of surveillance isn’t just invasive; it’s also ripe for abuse. Authoritarian regimes could use AI to identify and suppress political opponents, track down activists, and stifle free speech. Even in democracies, the temptation to use AI for surveillance could lead to a gradual erosion of civil liberties.

Second, there’s the risk of AI making decisions based on this surveillance. We’re already seeing AI being used in law enforcement to predict crime or identify suspects. While this might sound like a good idea on the surface, it raises significant ethical concerns. AI systems are only as good as the data they’re trained on, and if that data is biased, the AI’s decisions will be too. This could lead to wrongful arrests, unfair sentencing, and a justice system that perpetuates inequality rather than combating it.

AI and Decision-Making in Critical Infrastructure

AI is increasingly being integrated into the systems that run our world—from power grids and water supplies to financial markets and transportation networks. While this can lead to greater efficiency and reliability, it also introduces new vulnerabilities. When we hand over control of critical infrastructure to AI, we’re putting a lot of trust in systems that, as we’ve discussed, are not infallible.

One of the most concerning risks is the potential for catastrophic failure. AI systems can make mistakes, and when they do, the consequences can be severe. Imagine an AI controlling a power grid that misinterprets data and causes a blackout across an entire region. Or consider the financial markets, where an AI-driven trading algorithm could trigger a market crash. These aren’t just theoretical risks. Automated systems have already caused significant disruptions: in the 2010 “Flash Crash,” for instance, automated trading contributed to a sudden, steep drop in U.S. stock markets.

Even more concerning is the potential for these systems to be targeted by cyberattacks. AI-controlled infrastructure is a tempting target for hackers, terrorists, and even hostile nations. A successful attack on such systems could lead to widespread chaos, loss of life, and long-term damage to the economy and society. It’s essential to understand these risks and take steps to mitigate them, whether that means pushing for better security measures, developing backup systems, or simply staying informed about the vulnerabilities of the infrastructure we rely on.

The Singularity and Superintelligent AI

Perhaps the most debated and speculative threat is the idea of the Singularity, the point at which AI surpasses human intelligence and begins to improve itself at an exponential rate. This is where we cross into the realm of superintelligent AI, which could potentially outthink and outmaneuver humanity in ways we can’t even predict. It’s unclear whether it will ever happen, but the fact that we’re even discussing it seriously should give us pause.

The existential risk here is profound. If a superintelligent AI were to emerge, it could have its own goals, which might not align with ours. It’s impossible to predict how such an AI would behave, but if it decided that humanity was an obstacle to its objectives, or if it simply made decisions that were catastrophic for us, we might not have the ability to stop it. This is the kind of scenario that has worried people like Elon Musk and the late Stephen Hawking, and for good reason.

It’s worth noting that this isn’t just wild speculation. Leading AI researchers and thinkers have been increasingly vocal about the need to consider the ethical and safety implications of AI development. The idea isn’t to stop progress but to ensure that we’re developing safeguards and controls to prevent unintended consequences. But given the rapid pace of AI advancement, it’s not clear whether these measures will be enough or whether they’ll come in time. I’m skeptical on both counts.

Conclusion

Artificial Intelligence is one of the most powerful tools humanity has ever created. It has the potential to solve some of our most pressing challenges, from healthcare to climate change, and to revolutionize the way we live, work, and interact with the world. But with great power comes great responsibility—a cliché, perhaps, but one that rings especially true in the context of AI.

If we’re not careful, AI could lead us down a path of economic inequality, loss of privacy, and even existential danger. That’s not fearmongering; that’s reality.

The first step in facing these challenges is understanding what AI truly is—and what it isn’t. AI is not some sentient being plotting our demise, nor is it a foolproof solution to all our problems. It’s a complex, data-driven system that’s only as good as the people who design and implement it. It has incredible strengths, but also significant limitations and vulnerabilities. Recognizing these truths allows us to approach AI with the respect it demands and the caution it deserves.

On a personal level, it means staying informed, questioning the systems we rely on, and maintaining a level of skepticism about the promises of AI. It means building resilience into our lives—whether that’s learning new skills, securing our digital privacy, or preparing for economic shifts. And it means keeping our moral compass intact, ensuring that as we integrate AI into our society, we don’t lose sight of the human values that must guide its use.

On a broader scale, it means pushing for regulations and policies that prioritize safety and ethics over speed and profit. It means supporting research into AI safety, transparency, and accountability, and ensuring that the voices of those who are most at risk from AI’s impacts—workers, marginalized communities, and everyday citizens—are heard in the debate.

In the end, the story of AI is still being written. It’s up to us to decide what kind of role we want it to play in our lives and our society. Will we allow it to widen the gap between the haves and the have-nots, to erode our privacy and autonomy, and to potentially outstrip our control? Or will we harness its power thoughtfully, responsibly, and in a way that enhances our collective well-being?

AI is a tool, one that can either build a better future or dismantle the world we know. The choice, as always, is ours. The key to navigating this new landscape isn’t fear, but a healthy respect for the unknown, combined with a commitment to action. In a world where AI is increasingly in the driver’s seat, it’s up to us to make sure we’re not just along for the ride, but that our hands are on the wheel.

Learn More

Books

- “Superintelligence: Paths, Dangers, Strategies” by Nick Bostrom

- “Life 3.0: Being Human in the Age of Artificial Intelligence” by Max Tegmark

- “Our Final Invention: Artificial Intelligence and the End of the Human Era” by James Barrat

Research Papers and Articles

- “The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation”

- “AI Governance: A Research Agenda” by Allan Dafoe

- “Artificial General Intelligence: Coordination & Great Powers” by Ben Garfinkel